S3 Input

Etleap supports ingesting data from files stored in S3.

Source Setup

In order for Etleap to access data in your S3 bucket, you will need to setup a role, which can be assumed by Etleap and attach a policy, which enables access of your S3 bucket.

Step 1. Create a New Role

- Go to the IAM section of the AWS console .

- Click Create role, select Trusted Entity Type AWS account and then choose Another AWS account.

- Under Account ID, for a hosted account, enter

223848809711. For VPC deployments enter the AWS account ID where the instance is deployed. The Account ID can also be found in the S3 Input connection setup under Role → Click to show instructions. - Check the box that says Require External ID. Enter the ID provided in the instructions dropdown within the Role section of the Etleap connection setup page.

- Skip adding permissions for now. Click Next until you reach the Review page.

- Enter a name for the role.

- Click Create role.

Step 2. Attach a Policy to the Role

- Find the role in the IAM role list in the AWS console and click it.

- In the Permissions tab, click on Add permissions and then select Add inline policy.

- Click the JSON tab.

- In the statement below, substitute:

- Your bucket name for

<INPUT_BUCKET>. - Any subfolder in the bucket for

<CUSTOM_PREFIX>. - The intermediate bucket name set up previously for

<INTERMEDIATE_BUCKET>.

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Action": ["s3:GetObject", "s3:ListBucket"], "Resource": ["arn:aws:s3:::<INPUT_BUCKET>/<CUSTOM_PREFIX>/*"] }, { "Effect": "Allow", "Action": "s3:ListBucket", "Resource": "arn:aws:s3:::<INPUT_BUCKET>", "Condition": { "StringLike": { "s3:prefix": "<CUSTOM_PREFIX>*" } } }, { "Effect": "Allow", "Action": "s3:*", "Resource": ["arn:aws:s3:::<INTERMEDIATE_BUCKET>", "arn:aws:s3:::<INTERMEDIATE_BUCKET>/*"] } ] } - Your bucket name for

- Copy and paste this JSON into the textbox. Then click Review policy.

- Enter a descriptive Name of your choice, e.g.

etleap_minimal_access_policy. - Click Create policy

After creating a role with a policy that allows Etleap to read files from your S3 bucket, you can proceed with creation of S3 connection in Etleap connection setup page.

What Data is Available?

Any data stored in your bucket can be ingested through Etleap as long as it is one of the Supported Types.

For compressed file formats only a single file per archive is currently supported.

How Data is Updated

Depending on your use case, you can configure the pipeline to use any of the modes supported for file sources.

Files that Change

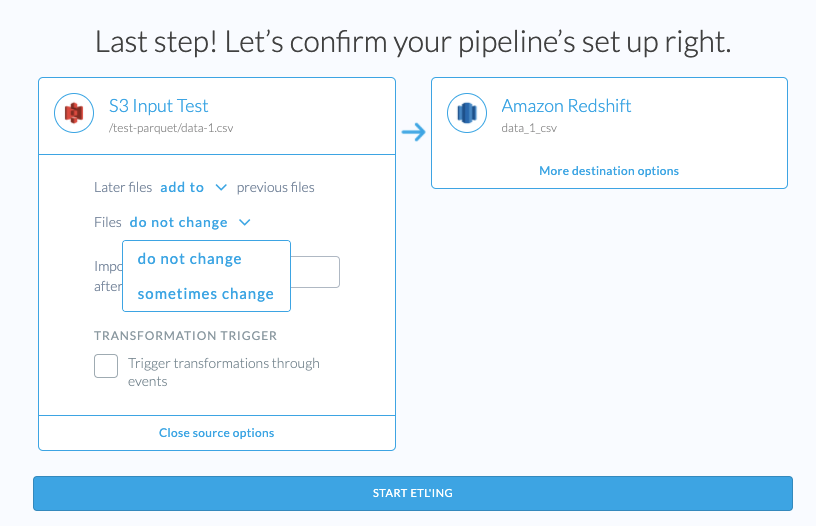

When the Later files add to previous files option is used during pipeline creation for S3 Input sources, Etleap also provides the choice of reprocessing files that have changed.

- Files do not change means that Etleap will not check whether a file has changed in the source and will only process files with new paths.

- Files sometimes change means that Etleap will check whether files that were already processed have changed. If the file has changed, Etleap fetches the new file, removes the old file’s data from the destination, and adds the changed data. (Note, old data is only removed from warehouse destinations.) This option adds a new column

file_path_hashto the destination table which it uses to identify records that came from an old file.

To detect new and changed files, Etleap uses S3 ETags and file paths. New files are detected by their S3 path while changed files are detected by comparing the ETag with the cached ETag from the previous version of the file.

S3 File Listing

Once the pipeline is created, Etleap uses an optimized listing cache to discover new files in the specified S3 location.

Archived Files

While attempting to Wrangle a sample of data, you might get an error message stating that the file you are trying to Wrangle is in an archived state. This will occur if your file is in one of the following storage classes :

- Amazon S3 Glacier Flexible Retrieval (Formerly S3 Glacier)

- Amazon S3 Glacier Deep Archive

- Amazon S3 Intelligent-Tiering (Archive Access or Deep Archive Access Tiers)

Files which are always in an archived state that requires they be retrieved asynchronously (Amazon S3 Glacier Flexible Retrieval and Amazon S3 Glacier Deep Archive) will be automatically filtered out, making them unavailable for ingestion and sampling in the Wrangler.

In order to continue Wrangling, you must first restore the object by following the instructions here . To speed up an in-progress restore request, following the instructions here .