Delta Lake Destination Settings

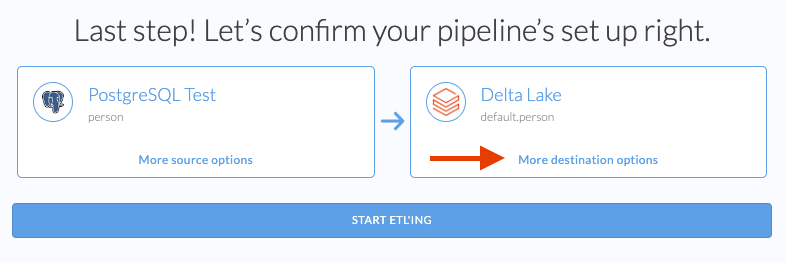

This page describes all of the destination options available when using Databricks Delta Lake as a pipeline destination in Etleap. These options are shown at the last step of creating a pipeline.

Primary Key Mapping

Choose the column(s) that uniquely identify a record in the destination. You may need to change this if you added transformations in the Wrangler that rename or alter the primary key column(s). Etleap uses the primary key to deduplicate data when loading. This option is shown if Etleap detects primary keys in the source. Primary keys are required to create pipelines in Append, Update, and Update with History Retention modes.
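To illustrate what deduplicating on a primary key means, the following minimal Python sketch collapses a batch of records so that only one record per key remains, keeping the last one seen. It is not Etleap's implementation. The helper name `dedupe_by_primary_key` and the record layout (dicts keyed by column name) are assumptions made for the example. A composite primary key is handled by combining several columns into a tuple.

```python
from typing import Any, Dict, Iterable, List, Sequence, Tuple

Record = Dict[str, Any]


def dedupe_by_primary_key(records: Iterable[Record], key_columns: Sequence[str]) -> List[Record]:
    """Keep one record per primary key; a later record replaces an earlier one.

    Hypothetical helper for illustration only.
    """
    latest: Dict[Tuple[Any, ...], Record] = {}
    for record in records:
        # A (possibly composite) primary key becomes a tuple of its column values.
        key = tuple(record[col] for col in key_columns)
        # Reassigning an existing key keeps its original position in the dict.
        latest[key] = record
    return list(latest.values())


batch = [
    {"id": 1, "name": "a"},
    {"id": 2, "name": "b"},
    {"id": 1, "name": "a2"},
]
print(dedupe_by_primary_key(batch, ["id"]))
# [{'id': 1, 'name': 'a2'}, {'id': 2, 'name': 'b'}]
```

This shows why the mapping matters: if a Wrangler transformation renames the key column and the mapping is not updated, the key lookup no longer points at the right column.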

Last Updated Mapping

Choose the column that represents when a record was last updated in the source. You may need to change this if you added transformations in the Wrangler that rename or alter the last-updated column. Etleap uses the last-updated mapping to ensure that it doesn't load outdated data. This option is not available if Etleap does not detect any timestamp columns, or if the source entity does not support Update mode.
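As a rough sketch of how a last-updated column can keep outdated data from overwriting newer data, the Python below applies an incoming record only if its last-updated value is not older than the one already stored for the same key. Etleap's actual load logic is not described on this page. The names `apply_if_newer`, `id`, and `updated_at` are hypothetical, and treating equal timestamps as applicable is an assumption of this sketch.

```python
from datetime import datetime
from typing import Any, Dict

Record = Dict[str, Any]


def apply_if_newer(table: Dict[Any, Record], incoming: Record,
                   key_column: str = "id",
                   updated_column: str = "updated_at") -> bool:
    """Upsert `incoming` into `table` unless it is older than the stored row.

    Returns True if the record was written. Column names are hypothetical;
    ties (equal timestamps) are applied, which is an assumed rule.
    """
    key = incoming[key_column]
    current = table.get(key)
    # Skip the write when the stored row was updated more recently.
    if current is not None and incoming[updated_column] < current[updated_column]:
        return False
    table[key] = incoming
    return True


table: Dict[Any, Record] = {}
apply_if_newer(table, {"id": 1, "updated_at": datetime(2024, 1, 2), "v": "new"})
apply_if_newer(table, {"id": 1, "updated_at": datetime(2024, 1, 1), "v": "stale"})
print(table[1]["v"])  # new
```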

Support readers before Databricks Runtime 10.2

Delta Lake tables created by Etleap have column mapping enabled by default.

Column mapping allows schema changes to be applied to the table with ALTER TABLE statements in Databricks, which are an efficient way to change the table's columns without rewriting the underlying Parquet files.

However, any tables that use column mapping cannot be read by Databricks clusters with a runtime version earlier than 10.2.

Enabling the Support readers before DBR 10.2 option in the UI, or the pre10Dot2RuntimeSupport setting in the API, disables column mapping for all tables created by the pipeline and allows those tables to be read by older Databricks runtimes.
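For reference, column mapping in Delta Lake is controlled by table properties: `delta.columnMapping.mode = 'name'` turns it on and requires Delta protocol reader version 2 and writer version 5. The sketch below builds the corresponding TBLPROPERTIES clause for either setting of the option. The helper names `delta_table_properties` and `tblproperties_clause`, and the `pre_10_2_support` flag standing in for the Etleap option, are hypothetical; this page does not say exactly which DDL Etleap issues.

```python
from typing import Dict


def delta_table_properties(pre_10_2_support: bool) -> Dict[str, str]:
    """Return Delta table properties for a new table.

    `pre_10_2_support` is a hypothetical stand-in for the Etleap option.
    """
    if pre_10_2_support:
        # No column mapping properties are set, so column mapping stays at
        # its default ('none') and does not raise the protocol versions.
        return {}
    # Column mapping by name requires reader v2 and writer v5 of the Delta protocol.
    return {
        "delta.columnMapping.mode": "name",
        "delta.minReaderVersion": "2",
        "delta.minWriterVersion": "5",
    }


def tblproperties_clause(props: Dict[str, str]) -> str:
    """Render properties as a SQL TBLPROPERTIES clause (empty string if none)."""
    if not props:
        return ""
    pairs = ", ".join(f"'{k}' = '{v}'" for k, v in props.items())
    return f"TBLPROPERTIES ({pairs})"


print(tblproperties_clause(delta_table_properties(pre_10_2_support=False)))
print(repr(tblproperties_clause(delta_table_properties(pre_10_2_support=True))))
```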

Enabling this option introduces the following limitations:

- Schema changes will cause the table’s underlying Parquet files to be rewritten, which can be slow.

- Schema changes will not preserve any column constraints such as NOT NULL on the destination tables.
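The cost difference shows up when a column is renamed. With column mapping, Delta Lake supports ALTER TABLE ... RENAME COLUMN as a metadata-only change; without it, the table data has to be rewritten. The hypothetical helper `rename_column_sql` below returns roughly the SQL each case needs. The rewrite path is sketched with CREATE OR REPLACE TABLE ... AS SELECT, which is one possible approach and not necessarily what Etleap runs; identifiers are not quoted, to keep the sketch short.

```python
from typing import List


def rename_column_sql(table: str, columns: List[str], old: str, new: str,
                      column_mapping: bool) -> str:
    """Return SQL that renames column `old` to `new` in a Delta table.

    Hypothetical helper for illustration; not the statements Etleap issues.
    """
    if old not in columns:
        raise ValueError(f"unknown column: {old}")
    if column_mapping:
        # Metadata-only change: the Parquet data files are not rewritten.
        return f"ALTER TABLE {table} RENAME COLUMN {old} TO {new}"
    # Without column mapping, one option is to rewrite the whole table with
    # the new column name, which reads and writes every data file.
    select_list = ", ".join(f"{c} AS {new}" if c == old else c for c in columns)
    return f"CREATE OR REPLACE TABLE {table} AS SELECT {select_list} FROM {table}"


print(rename_column_sql("sales", ["id", "amt"], "amt", "amount", column_mapping=True))
print(rename_column_sql("sales", ["id", "amt"], "amt", "amount", column_mapping=False))
```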

Quality Check

When quality checks are enabled for a pipeline, Etleap will wait to receive a QUALITY_CHECK_COMPLETE event before loading data to the destination.
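A minimal sketch of this waiting behavior, assuming the load step blocks until a QUALITY_CHECK_COMPLETE event arrives. How Etleap receives the event is not described on this page; the `QualityCheckGate` class, its `on_event` callback, and the timeout parameter are hypothetical.

```python
import threading
from typing import Callable, Optional


class QualityCheckGate:
    """Blocks loading until a QUALITY_CHECK_COMPLETE event is received.

    Hypothetical illustration; not Etleap's implementation.
    """

    def __init__(self) -> None:
        self._complete = threading.Event()

    def on_event(self, event_type: str) -> None:
        # Only the completion event opens the gate; other events are ignored.
        if event_type == "QUALITY_CHECK_COMPLETE":
            self._complete.set()

    def wait_then_load(self, load: Callable[[], None],
                       timeout: Optional[float] = None) -> bool:
        """Run `load()` once the event has arrived; return False on timeout."""
        if not self._complete.wait(timeout):
            return False
        load()
        return True


loaded = []
gate = QualityCheckGate()
gate.on_event("SOME_OTHER_EVENT")
print(gate.wait_then_load(lambda: loaded.append("batch"), timeout=0.1))  # False
gate.on_event("QUALITY_CHECK_COMPLETE")
print(gate.wait_then_load(lambda: loaded.append("batch"), timeout=0.1))  # True
```

Using `threading.Event` lets the loader block without polling; a real pipeline would also need to decide what happens on timeout, which this sketch leaves to the caller.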