Schema Changes

Pipelines in Etleap are schema-aware. Certain source types are aware of externally-defined schemas which describe the shape of the data that is read during the extraction phase. All Etleap pipelines also implicitly define the schema for the pipeline destination through the transformation script which is specified in the Wrangler.

Changes to these externally-defined schemas and destination schema are handled by updating the transformation script and notifying all users that the pipeline has been shared with. Pipelines can be configured to update the transformaiton script automatically, or require user approval.

The destination schema changes, resulting from updating the script, are applied incrementally as Data Definition Language (DDL) operations when possible, otherwise a pipeline refresh is triggered and the destination table is rebuilt with the new schema.

This article describes the different types of schema changes that Etleap handles and how pipelines and transformation scripts are affected.

Types of Schema Changes in Etleap

Changes to the Source Schema

Etleap is aware of changes in external schemas for database sources and Salesforce connections. During the extraction phase, any changes to an external schema that Etleap tracks results in a schema change event for pipelines extracting from that schema. See Handling Schema Change Events.

Etleap can detect whether a column has been added or dropped for these types of schemas.

| Type of Change | Detected |

|---|---|

| Added column | Yes |

| Dropped column | Yes |

| Renamed column | Yes (as a drop and an add) |

| Type changes | No |

Changes to the Shape of the Data

During the transformation phase for a pipeline, the raw extracted data is altered by a series of transform steps into a set of columns that are loaded to the pipeline destination. Therefore, selecting steps for the transformation script defines an inferred schema that is used for the destination table.

Some transform steps are able to detect additional columns in the data that were not known when creating the transformation script, even when there is no external schema defined in the source. These steps can add new columns to this inferred schema while the pipeline is running by adapting the script. See Handling Schema Change Events.

An example of this is the Flatten JSON object transform which is able to detect any number of additional JSON properties that may appear throughout the lifetime of the pipeline. When a new JSON key appears in the data, the pipeline handles this as a schema change.

| Type of Change | Detected |

|---|---|

| Added Column | Yes |

| Dropped Column | No |

| Renamed Column | No |

| Type Changes | No |

Supported Changes to the Data’s Shape

Changes to the data shape are only captured for JSON data. For other plain-text formats such as CSV, Etleap is unable to detect when a column has been added, renamed or removed.

If the file format changes for a pipeline, you will need to do the following:

- Ensure that the previous files in the source have also been updated to the new schema. The data may get corrupted if the schema is inconsistent between files. Visit the FAQ here for more information and a potential workaround.

- Return to the Wrangler for this pipeline.

- Refresh the sample by clicking the refresh icon so you get the latest version of the file.

- Edit the number of columns in the Parse CSV step.

- Click Update Script. The pipeline will automatically refresh with the new script, reprocessing all old data.

However, until the script has been updated according to the steps above, any new columns will not be added to the destination and any dropped columns will remain in the destination with null values going forward.

The Promote First Row to Header transform is able to support out-of-order columns in a plain-text file by using the header row to identify the column rather than the column order. This will gracefully ignore new columns without causing data integrity issues.

Note: Any transforms applied to the header row sequentially after the Promote First Row to Header transform (e.g., column rename steps), will not impact the naming of columns in the schema that was defined by the Promote First Row to Header transform.

Changes made to the Script by the User

Any changes the user makes to the transformation script may also result in a schema change for the destination. Most script changes trigger a pipeline refresh because the nature of the change means that historic data needs to be reprocessed. For example, changing the value of a column may affect rows already loaded to the destination.

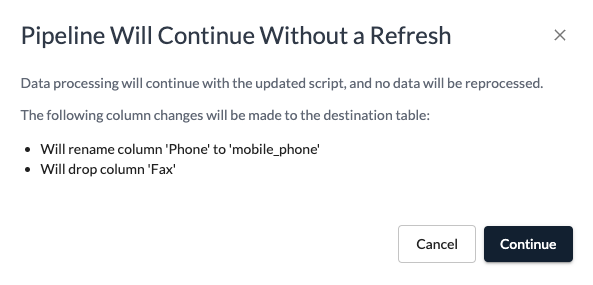

However, if a change to the pipeline script only consists of column renames and drops, the pipeline will continue processing data without refreshing, and the DDL operations will be applied to the destination table when the load phase occurs. No special handling of the script is necessary here as with changes to the Source Schema or the Shape of the Data.

For these changes to not require a refresh, the new rename and drop steps must be added to the end of the old script. No existing steps can be changed, and the types of the columns must all remain the same.

| Type of Change | Can continue without refreshing |

|---|---|

| Added Column | No |

| Dropped Column | Yes |

| Renamed Column | Yes |

| Type Changes | No |

Handling Schema Change Events

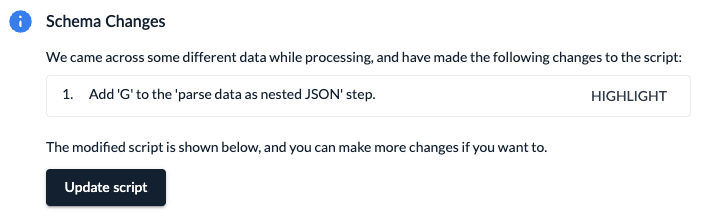

When the source schema or the shape of the incoming data changes for a pipeline, the transformation script must be updated to reflect the new schema. Etleap generates an adapted version of the script which reflects the changes to the columns.

| Added Columns | New columns are included in the adapted script by the transform step that interprets the column from the input data. For example:

|

| Dropped Columns | Columns that are dropped from external schemas will also be removed from the adapted transformation script when the schema change event is handled by the pipeline. Any references to the columns will be removed from all steps in the adapted script, and any steps that only act on dropped columns will be removed from the script entirely. |

Pipelines can be configured to either immediately and automatically apply the adapted script to the pipeline, or to stop and require manual approval from the user.

Automatic Schema Changes

When a pipeline is configured for Automatic Schema Changes, schema change events will result in the transformation script being updated automatically. All users that the pipeline is shared with will be notified of the schema change. A notification is also sent to the Notification Email and AWS SNS Topic, configured in Admin Console → Notifications → Outbound Notification Settings. The pipeline will continue processing data beyond the schema change without requiring a refresh.

Manual intervention is required if the schema change causes the Wrangler to output more than the maximum supported number of columns (2000). In this case, the pipeline owner and all shared users will be notified that the pipeline has been stopped due to exceeding the column limit.

Manual Schema Changes

When a pipeline is configured for Manual Schema Changes, the pipeline will stop when it encounters a schema change and notify all shared users that manual intervention is required. The pipeline can be resumed by resolving the schema change in the Wrangler.

When opening the Wrangler for a pipeline that is stopped for schema changes, Etleap displays the adapted transformation script which reflects the newly added or dropped columns. The changes can be accepted by clicking Update Script. The pipeline will then continue processing data without refreshing the pipeline.

The user also has the opportunity to amend the adapted script before accepting the changes. The script can be changed in the following ways without requiring a refresh:

- New steps can be added to the end of the generated script, as long as they do not modify or remove columns that were not added by the schema change.

- Existing steps in the generated script cannot be modified, removed, or reordered.

Any changes made to the script that do not follow these two rules will result in the pipeline refreshing, as data will need to be reprocessed to handle the script change. A message will be displayed when clicking Update Script that will indicate whether a refresh will be triggered or not, and confirmation is required before the script changes are saved.

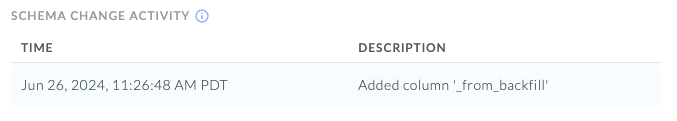

Viewing the Schema Change History for a Destination Table

For pipelines that load to warehouse destinations, any changes to the destination table’s schema can be viewed on the pipeline page under Settings → Destination → Schema Change Activity.

Any changes to the destination columns that have been applied since the table was created are displayed here. The time that the destination table was updated is also displayed. Pending indicates that the change exists in the latest transformation script but has not yet been applied to the destination table, which will occur during the load phase.

When a pipeline is refreshed, the destination table is replaced and the schema change history is reset.

Permanently Stopped Activities

Pipelines only maintain a single lineage of transformation script versions. To prevent branches in the script history from forming, only the latest script may be changed:

- When a script is manually edited in the Wrangler, the latest script for the pipeline is always shown.

- When a script is automatically adapted for a schema change, only the latest script may be adapted.

As pipelines run refreshes concurrently to the existing activities, a refresh that is caused by a script change will be using the newer version of the script while the activities prior to the script change will still be active and using the old version of the script.

This is usually reconciled when the refresh completes and the activities using the old script are stopped and deleted. However, if a schema change is detected by the activities using the old script, the pipeline will be unable to handle the schema change as a newer script exists (and is being used by the refresh).

The activities using the old script will be permanently stopped until the refresh completes and replaces the stopped activities.

Refreshes are guaranteed to always use the latest version of the script, so a schema change encountered by a pipeline’s refresh activities can always be handled.

FAQ

What changes can be made in the Wrangler without causing a refresh?

- When the only changes to the script are new Rename

columnor Dropcolumnsteps.- For this to not require a refresh, the new rename and drop steps must be added to the end of the old script and no existing steps can be changed.

- Types cannot change.

- See Schema Changes from Script Updates by User

- When new columns are added to the schema.

- When a schema change occurs Etleap will generate a new script by adapting the existing script to the schema changes.

- For this to not require a refresh, the script Etleap generates must be used.

- New steps can be added to the end of this generated script before submitting it, but the new steps must not modify or remove columns that were not added by the schema change.

- Existing steps in the generated script cannot be removed, modified, or reordered.

- See Manual Schema Changes

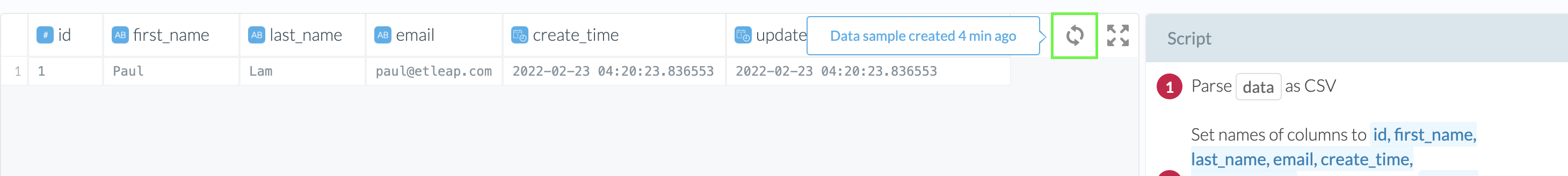

Why is the schema shown in the Wrangler out of date?

The samples shown in the Wrangler are cached by Etleap. A fresh sample can be fetched by clicking the refresh button in the top-right of the Wrangler interface. Hovering over this button will display how recently the current sample was taken.

When refreshing the sample for database source pipelines, the latest schema is fetched from the source.

For Salesforce sources, the schema is only refreshed when the pipeline runs its extraction phase. Ensure that the pipeline is active, unpaused, and has recently run its extraction according to its Update Schedule.

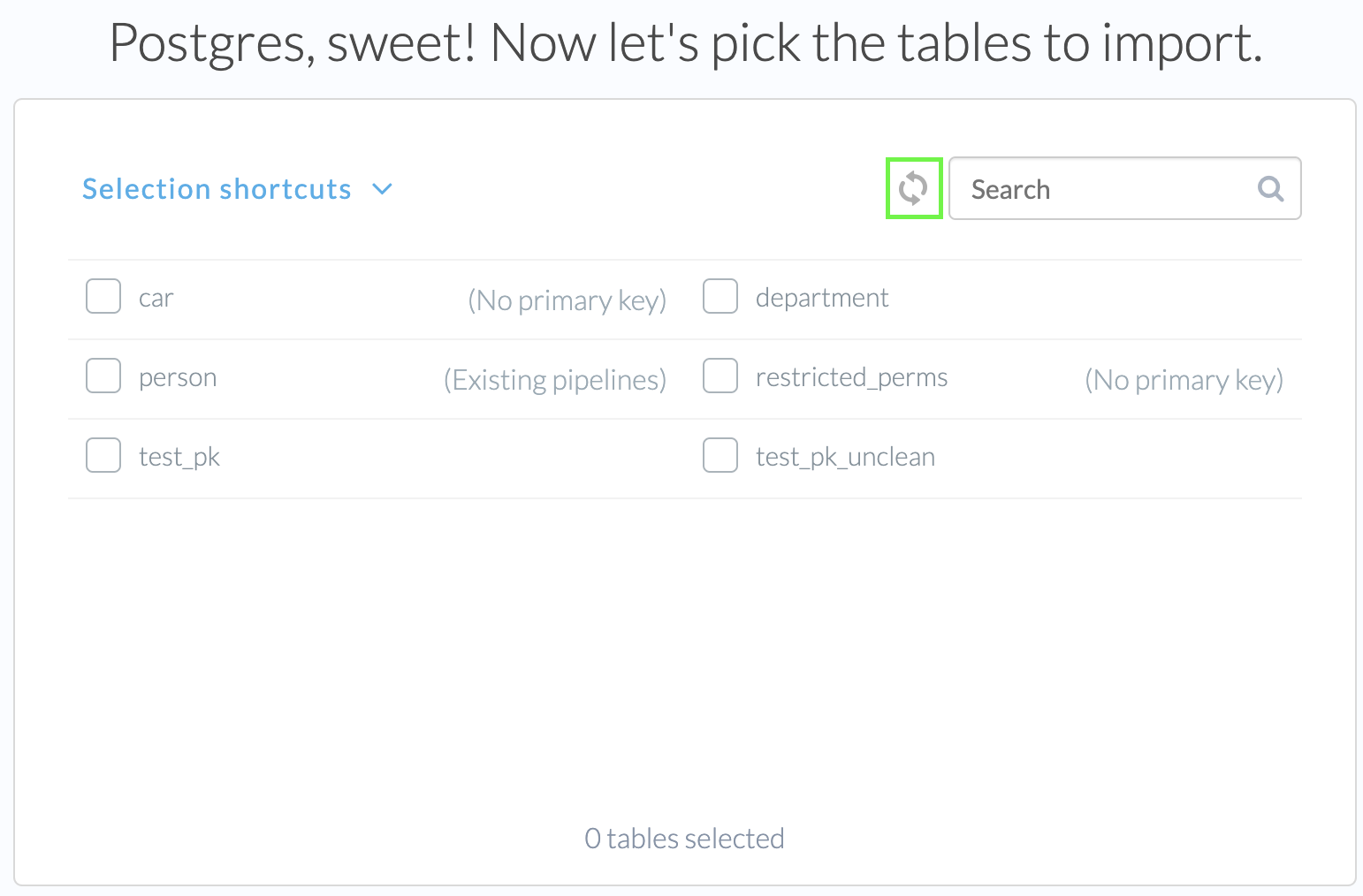

For new pipelines, external schemas are also refreshed when re-fetching the table list which can be done by clicking the refresh button in the top-right of the File Picker.

Why doesn’t my Wrangler CSV sample have all of the columns that exist in newer files?

For CSV pipelines, the Wrangler selects the largest file by file size as the sample that your script will be created against. If there are smaller files that are wider (i.e., have more columns) in the source, these additional columns will not be identified.

If your files meet the following criteria, you may be able to implement a workaround to ensure the Wrangler is identifying a schema from the widest file:

- You have CSV files in a directory where some files have fewer columns than others

- The CSV file that is being used in the Wrangler sample does not contain all of the available columns

- At least one of the CSV files contains all of the available columns

- You do not expect there to be future files that contain new columns

If your scenario satisfies all of the above conditions, you can create a pipeline that includes all of the columns by following these steps:

- Identify the CSV files that contains all of your available columns

- Make this the only file in the directory designated by your pipeline’s path

- Create your script in the Wrangler, you should see all available columns in the sample

- Once the pipeline is created, move your other files to the directory that contains your original file

Please Note: If future files are added to this directory with columns that are not in your original Wrangler sample those columns will not be added to the pipeline’s schema and will be missing from your destination table.