How a Pipeline Works

This article describes the details of how exactly a pipeline syncs data, after the pipeline has been created. For instructions on how to set up a pipeline, see How to Create a Pipeline.

Overview

This section provides a high-level description of the phases that a pipeline goes through after being created.

The pipeline begins in the Catch-up Phase and gets all the data in the source as quickly as possible.

-

The pipeline fetches and processes the first batch of data.

-

Starting from the watermark of the previous batch, the pipeline keeps getting batches until it has gotten all of the data.

-

For Update Mode pipelines, it gets the batch of data that was updated since the pipeline started.

The pipeline is now considered caught-up and switches to the Incremental Phase.

- From now on, every time the update schedule is triggered, the pipeline fetches the next batch starting from the previous watermark.

That’s the basics of how a pipeline operates. The following sections explains the details of how the various parts work.

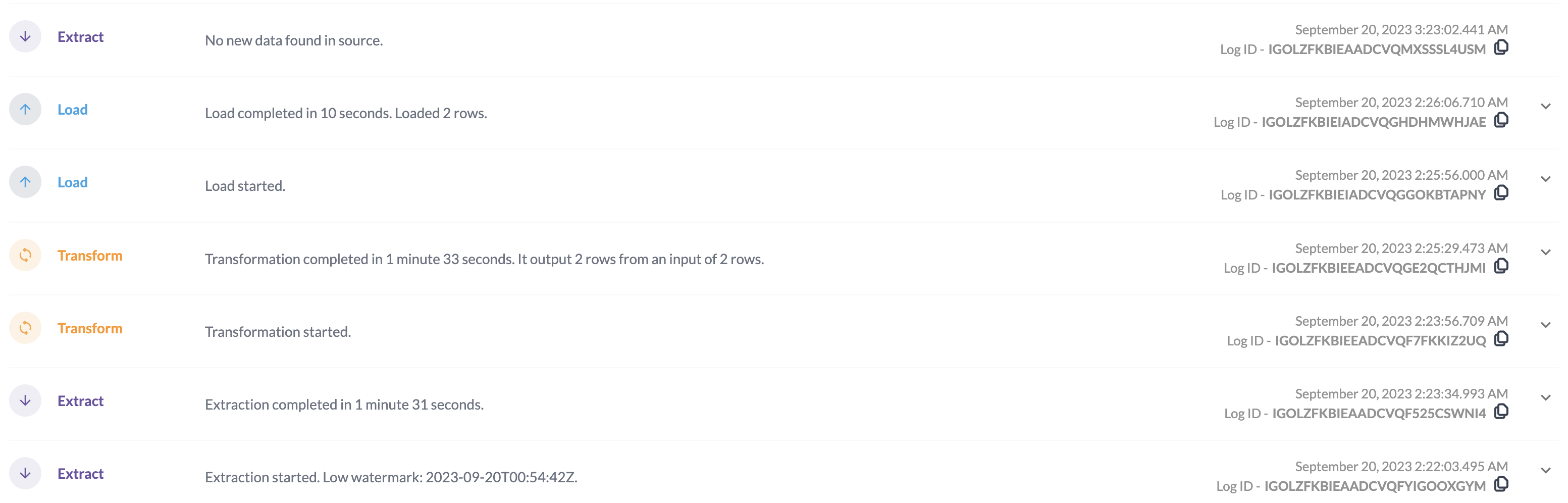

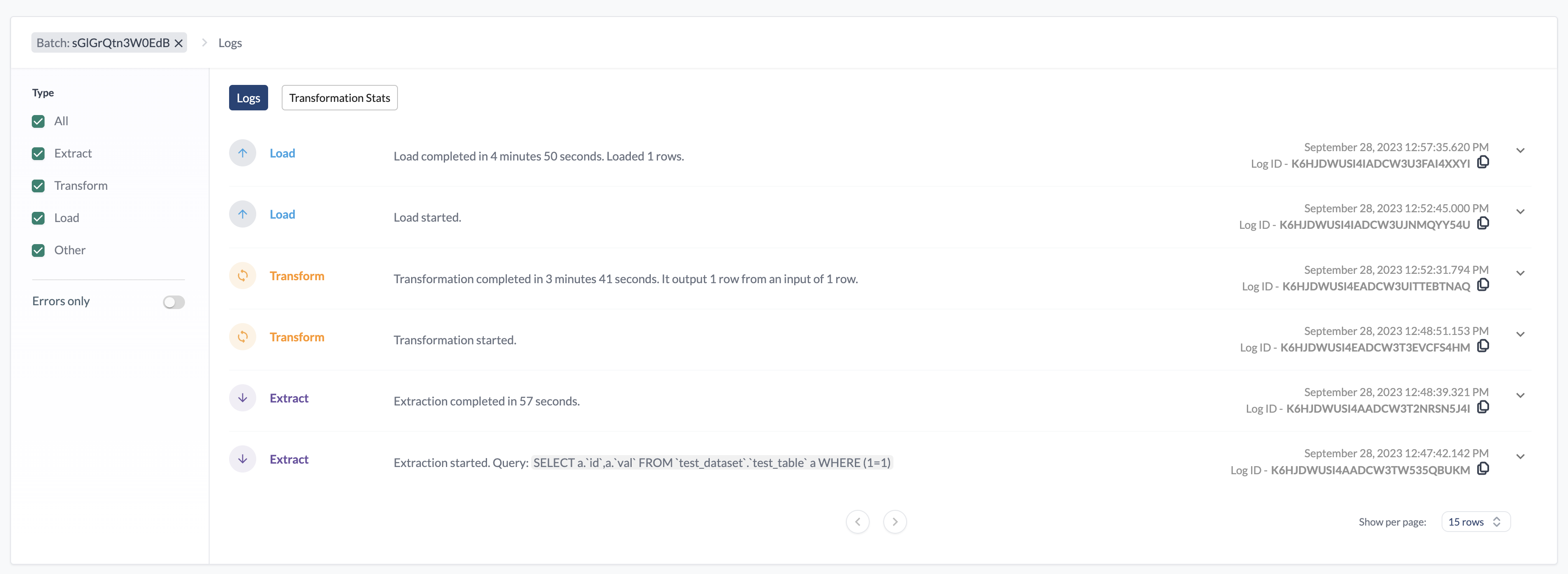

Batch

The pipeline processes data in batches. Each batch does the following:

-

Extract a subset of data from the source, starting from the watermark of the previous batch, or the beginning of the dataset if this is the first batch.

-

Transform the batch data according to the pipeline’s script.

-

Load the transformed data into the destination.

File-based sources skip the Extract step and instead start directly at the Transform step.

The sections below describe each step in detail.

High Watermark

The high watermark describes which data is included in the batch. Specifically, the batch has the data where the watermark values are less than or equal to the high watermark.

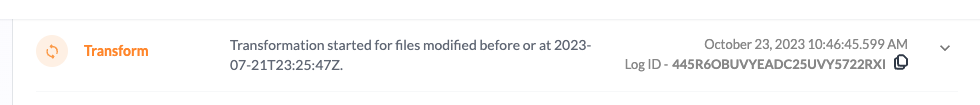

For file based sources, the high watermark is shown when a transformation is started. If the pipeline is event based, its high watermark will be the external batch ID of the event processed as part of the batch.

Extraction

For all sources except for file-based and stream-based sources, a new batch begins by extracting the data from the source. The exact process for this varies by source, but typically involves querying the database or making API calls.

The extraction process fetches records that are greater than the previous watermark. The maximum batch size depends on the source type.

Transformation

The transformation step applies the script defined in the Wrangler to the batch data. The files that get transformed depends on the source type:

-

For source types that use extractions, the pipeline always transforms all the data retrieved during the extraction.

-

For source types that don’t use extractions, the pipeline transforms files that have been modified since the previous watermark, in order of increasing modified date. The maximum size for a batch is 32 GB or 4096 files.

Load

Finally, the pipeline loads the transformed batch data into the destination. The exact process for this differs by destination type and pipeline mode, but generally has the following steps:

- If this is the first load, create the destination table.

- If the script has changed, apply any pending schema changes.

- Load the batch data into a staging table.

- Remove any outdated data in the destination table.

- Add the batch data into the destination table.

Catch-up Phase

The pipeline begins in the Catch-up Phase, which processes all the data available for the source. Depending on the source type and the amount of data in the source, the catch-up might happen over several batches to avoid overloading the source or using too much API quota.

- For Database Sources, the primary key is used as the watermark. The batch size is 2,000,000 records for SQL database and 50,000 for MongoDB.

- For some API sources, the watermark is the creation date. The data is batched by month.

Incremental Phase

Once the pipeline has processed all the data in the source, the pipeline switches to the Incremental Phase. Now the pipeline only runs at the interval defined by its update schedule. An incremental batch contains all the data that has been updated since the previous watermark, unless the maximum batch size is reached. If no new data is discovered, then the pipeline does not start any processing.